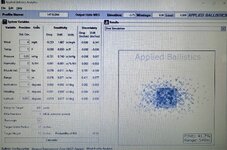

I'm curious to know: does accuracy dispersion start to matter more when you shrink the target size down? Say you're trying to hit a 4-6 inch target at 500. Would the accuracy matter more because the general dispersion starts to get larger than the target, or do the other factors increase so much that it still doesn't matter?

I know it's not really relevant to big game hunting, but possibly to long range rabbit/fox shooting.

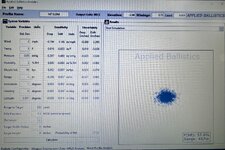

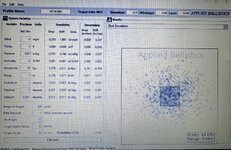

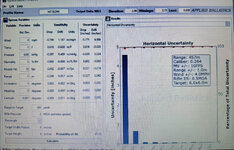

Using a standard 6.5 CM load with a 147gr ELD-M at 2,700 fps MV, 5,000 ft DA, a 6” square target, and rifles of 1.5 MOA, 1 MOA, and .5 MOA, with the same inputs for all. Again, keep in mind these are first-round hit probabilities in an unshot/novel environment and on a novel target.

4 mph wind caller.

1.5 MOA is 37.4%

1 MOA is 43.9%

.5 MOA is 47.7%

That is about a 10-point increase in hit rate going from a realistic mechanical precision of 1.5 MOA to a completely unrealistic .5 MOA.

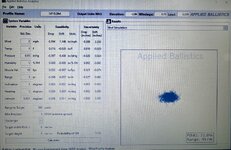

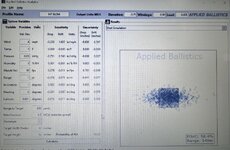

Now, the same but with a 2 mph wind caller:

1.5 MOA is 57.6% (already about 10 points higher than the 4 mph wind caller with a .5 MOA rifle)

1 MOA is 71%

.5 MOA is 79%

That is a difference of about 21 points from 1.5 MOA to .5 MOA.
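As a quick check on the arithmetic, here is a small sketch that compares the quoted hit percentages directly. The table layout and the names `hit_pct` and `gain` are just for illustration; the numbers are the ones listed above, and the differences are in percentage points.

```python
# First-round hit percentages quoted above, keyed by wind-call error (mph)
# and then by rifle precision (MOA).
hit_pct = {
    4: {1.5: 37.4, 1.0: 43.9, 0.5: 47.7},
    2: {1.5: 57.6, 1.0: 71.0, 0.5: 79.0},
}


def gain(before, after):
    """Difference in percentage points, rounded to one decimal place."""
    return round(after - before, 1)


# Gain from tightening the rifle from 1.5 MOA to .5 MOA, wind calling unchanged.
rifle_gain_4mph = gain(hit_pct[4][1.5], hit_pct[4][0.5])
rifle_gain_2mph = gain(hit_pct[2][1.5], hit_pct[2][0.5])

# Gain from improving wind calling from 4 mph to 2 mph, rifle unchanged.
wind_gain_1p5_moa = gain(hit_pct[4][1.5], hit_pct[2][1.5])
wind_gain_0p5_moa = gain(hit_pct[4][0.5], hit_pct[2][0.5])

print("Rifle 1.5 -> .5 MOA, 4 mph caller:", rifle_gain_4mph)
print("Rifle 1.5 -> .5 MOA, 2 mph caller:", rifle_gain_2mph)
print("Wind 4 -> 2 mph, 1.5 MOA rifle:", wind_gain_1p5_moa)
print("Wind 4 -> 2 mph, .5 MOA rifle:", wind_gain_0p5_moa)
```

With these numbers, halving the wind-call error on the 1.5 MOA rifle gains roughly twice as many points as tightening the rifle to .5 MOA does for the 4 mph wind caller.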

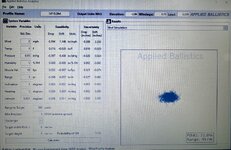

You do see more of a difference when the target is at mid ranges (300 to 500 yards) and is smaller than the base cone.

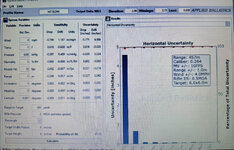

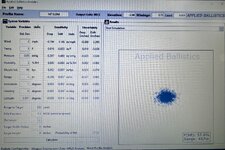

Here’s why. With a 4 mph average wind caller (which you, the colloquial “you”, aren’t in broken terrain unless you shoot in novel broken terrain a lot), wind is the largest source of error, not precision.

Causes of horizontal error with a 1.5 MOA rifle: the vast majority of error is wind.

With a .5 MOA rifle, the vast majority of error is still wind.

If you want to hit things more often, find what is producing the larger source of error in reality, in the field, and reduce that source.
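To see why the larger source dominates, here is a minimal sketch that assumes the error sources are independent and roughly normal, so they combine as the square root of the sum of squares. This is a simplification and not how the ballistic app in the screenshots computes its numbers. The inch values are made-up placeholders, not derived from the 6.5 CM load above, and the function names are hypothetical.

```python
import math


def combined_sd(sources):
    """Root-sum-square of independent error standard deviations (inches)."""
    return math.sqrt(sum(sd ** 2 for sd in sources.values()))


def variance_share(sources, name):
    """Fraction of the total variance contributed by one named source."""
    total_var = sum(sd ** 2 for sd in sources.values())
    return sources[name] ** 2 / total_var


def prob_within(half_width_in, sd_in):
    """Chance a zero-centered normal error lands within +/- half_width_in.

    Horizontal axis only; a real hit also needs the vertical to line up.
    """
    return math.erf(half_width_in / (sd_in * math.sqrt(2)))


# Placeholder horizontal error SDs in inches at the target (assumed values).
baseline = {"rifle": 2.0, "wind": 6.0}
better_rifle = {"rifle": 1.0, "wind": 6.0}  # halve the smaller source
better_wind = {"rifle": 2.0, "wind": 3.0}   # halve the larger source

for label, case in [("baseline", baseline),
                    ("better rifle", better_rifle),
                    ("better wind call", better_wind)]:
    sd = combined_sd(case)
    print(label,
          "| total SD (in):", round(sd, 2),
          "| wind share of variance:", round(variance_share(case, "wind"), 2),
          "| P(within +/-3 in):", round(prob_within(3.0, sd), 2))
```

Under these assumed numbers, halving the rifle error barely moves the total, while halving the wind error cuts it substantially. That is the same pattern as in the hit percentages above.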