Discussions around “cold bore shifts” and “cold bore zeroes” versus “warm or hot barrel zeroes” are constant. So is the belief that barrels “walk” or that groups open up as they heat up.

After multiple discussions, @Ryan Avery and Jake @Unknown Munitions and I set up a day to shoot and measure what happens. It took quite a bit of discussion to get everyone on the same page and to explain the limitations of the test and the resolution it could measure. Basically, the more data, the more accurate the results. However, there is a cutoff point past which shooting more rounds doesn't really increase the resolution of the results.

Mainly we were discussing whether 10, 20, or 30 round groups should be utilized.

For the best data (95% probability), 30-shot groups are required. That would be 30 cold bore shots and then 30 hot bore shots from each rifle. The benefit of 30-round groups is that the mean point of impact (MPOI) would be very solid: there would be very little deviation between groups, and any deviation beyond about .1 inch could confidently be attributed to a real, observable shift due to heat. The issue with 30-round groups is the time and the amount of ammunition required for each rifle.

10 rounds was the minimum required to get usable data. The time and ammo expenditure would be significantly less, but so would the resolution. If a rifle averaged 1 MOA for ten-round groups, the center of any group could vary by up to +/- 1/3 MOA. That is, with nothing changing from one 10-round group to the next, you can and will see the apparent center shift around by up to .2-.4 MOA, because ten rounds don't show the true cone.

20 rounds would split the difference, landing a bit closer to 30-round accuracy than to 10-round accuracy.
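The 10/20/30 trade-off comes from the standard error of the mean: if shots scatter independently with a per-axis standard deviation σ, the center of an n-shot group wanders by roughly σ/√n. That is about 0.32σ for 10 shots, 0.22σ for 20 and 0.18σ for 30, which is why 20 sits closer to 30 than to 10. Below is a small Monte Carlo sketch of that, assuming a simple normal, independent-shot model and an arbitrary σ (not measured from any rifle in this test); `mpoi_spread` is a hypothetical helper.

```python
import math
import random
import statistics


def mpoi_spread(n_shots: int, sigma: float, trials: int = 20000, seed: int = 1) -> float:
    """Estimate how far the mean point of impact (MPOI) of an n-shot group
    wanders along one axis when nothing about the rifle changes.

    Model (an assumption): every shot is independent and lands at a normal
    offset with standard deviation `sigma` on that axis.
    """
    rng = random.Random(seed)  # seeded so the estimate is repeatable
    centers = []
    for _ in range(trials):
        shots = [rng.gauss(0.0, sigma) for _ in range(n_shots)]
        centers.append(statistics.fmean(shots))  # MPOI of this simulated group
    return statistics.stdev(centers)  # spread of the MPOI from group to group


if __name__ == "__main__":
    sigma = 1.0  # arbitrary per-axis dispersion; results scale linearly with it
    for n in (10, 20, 30):
        simulated = mpoi_spread(n, sigma)
        expected = sigma / math.sqrt(n)  # standard error of the mean
        print(f"{n:2d} shots: simulated {simulated:.3f}, sigma/sqrt(n) = {expected:.3f}")
```

Real dispersion is not perfectly normal or independent (wind, shooter input, ammo lots), so treat this as a lower bound on how much an MPOI moves with no real change.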

Ultimately we decided to use 10-round groups: one 10-round group of cold bore shots and one 10-round group of hot bore shots as a baseline, with the understanding that the apparent center can shift by up to .3 MOA or so with no change. If you shoot 10 cold bore rounds into one group and another 10 cold bore rounds into a second group, the centers of the two groups will vary slightly with respect to the point of aim, because 10 rounds isn't enough to show you the true center for most rifle systems.

Because of that statistical reality, we agreed to note only significant, functional shifts, defined as .1 mil (.36 inches at 100 yards), or one click of the scope. Again, because of the limitations of ten-round groups, any rifle that showed a shift of more than .36 inches from cold to hot would have another ten rounds fired to see if the shift was consistent.
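The .36-inch figure falls straight out of the definition of a milliradian: 0.1 mil is 0.0001 of the range, and 100 yards is 3,600 inches, so 0.0001 × 3,600 = 0.36 inches. A minimal sketch of that conversion plus the retest rule, assuming the threshold is applied to each axis separately (one click of elevation or one click of windage); `mils_to_inches` and `needs_retest` are hypothetical names.

```python
MIL = 0.001  # one milliradian, in radians
INCHES_PER_YARD = 36


def mils_to_inches(mils: float, range_yards: float) -> float:
    """Linear offset subtended by an angle in mils at a given range.

    Uses the small-angle approximation (offset = angle in radians x range),
    which is what the "0.1 mil = .36 in at 100 yd" rule of thumb relies on.
    """
    return mils * MIL * range_yards * INCHES_PER_YARD


# One 0.1-mil click at 100 yards, rounded to the 0.01 in resolution used in the results.
CLICK_IN = round(mils_to_inches(0.1, 100), 2)


def needs_retest(elev_shift_in: float, wind_shift_in: float, threshold_in: float = CLICK_IN) -> bool:
    """Hypothetical helper: True if either axis moved more than one click,
    which under the agreed protocol triggers another ten-round group.
    Applying the threshold per axis is an assumption."""
    return abs(elev_shift_in) > threshold_in or abs(wind_shift_in) > threshold_in
```

For example, `needs_retest(0.52, 0.13)` is `True`, while a shift of exactly .36 inches is not "more than" one click and returns `False`.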

This process would be done with ten (10) different rifles. Before starting, a baseline temperature was measured inside the chamber and at the muzzle of each rifle. The rifles would be shot one round at a time, round-robin style, and then cooled to ambient temperature before the next cold bore shot, repeating this until 10 rounds had been fired from each. The hot barrel shots would be taken as quickly as possible, and the ending temperature recorded.

No group reduction techniques were allowed; every round fired counted. The mean point of impact is the center of all rounds fired in a group, no matter what shape or how ugly. Group size would be noted but has no bearing on this test; only the difference in mean point of impact, or “zero,” does. Likewise, whether the rounds hit the point of aim is immaterial, as all groups were measured using Hornady’s Group Analysis tool, which gives deviation from the aimpoint.
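Measuring the shift this way comes down to averaging every shot's coordinates for each group and subtracting one center from the other. A sketch, assuming each shot has been recorded as (windage, elevation) offsets in inches from the aim point; the Hornady tool does this for you, and `mpoi` and `zero_shift` here are hypothetical names, not its API.

```python
from statistics import fmean
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (windage, elevation) in inches relative to the aim point


def mpoi(shots: Sequence[Point]) -> Point:
    """Mean point of impact: the plain average of every shot, with no rounds discarded."""
    if not shots:
        raise ValueError("a group needs at least one shot")
    return (fmean(x for x, _ in shots), fmean(y for _, y in shots))


def zero_shift(cold: Sequence[Point], hot: Sequence[Point]) -> Point:
    """Hot MPOI minus cold MPOI on each axis; group size plays no part."""
    cold_x, cold_y = mpoi(cold)
    hot_x, hot_y = mpoi(hot)
    return (hot_x - cold_x, hot_y - cold_y)


if __name__ == "__main__":
    # Made-up coordinates, only to show the call; not data from this test.
    cold_group = [(0.0, 0.0), (1.0, 1.0), (-1.0, -1.0), (0.0, 2.0)]
    hot_group = [(0.5, 0.0), (0.5, 1.0)]
    print(zero_shift(cold_group, hot_group))
```

Because every shot is averaged in, a flyer moves the MPOI in proportion to how far out it is, which is the point of not culling rounds.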

Variable scopes were set to their highest magnification, capped at 20x. Fixed scopes were used at their fixed power.

The rifles were as follows-

1). Unknown Munitions Competition 7PRC braked, in XLR chassis with NF NX8 4-32x scope. UM ammo.

2). Tikka Varmint T3x 6.5cm suppressed, in a McMillan Game Warden 2.0 stock, with NF NX8 4-32x scope. UM ammo.

3). Unknown Munitions 6.5 SUAM Imp, suppressed, in a Manners LRH stock, with NF NX8 4-32x scope. UM ammo.

4). Factory T3 lite 308 in Stocky’s VG stock, suppressed, with SWFA 10x scope. Hornady Black 155gr AMAX ammo.

5). Gunwerks Nexus 6.5 PRC, suppressed, with NF NX8 4-32x. Hornady 143gr ELD-X ammo.

6). Factory Tikka T3 223, suppressed, with SWFA fixed 6x scope. UM ammo.

7). Tikka Tac 308win in KRG Bravo chassis, braked, with Bushnell Match Pro scope. Hornady Black 155gr AMAX ammo.

8). Tikka T3 Lite 223, suppressed, with SWFA fixed 6x scope. UM ammo.

9). Sako S20 6.5 CM, suppressed, with Trijicon Tenmile 3-18x44mm scope. UM ammo.

10). Tikka M595 Master Sporter 6XC, suppressed, with Minox ZP5 5-25x56mm scope. 115gr DTAC ammo.

Results:

Each target has the 10 cold bore shots on the left and the 10 hot barrel shots on the right.

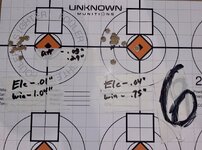

Gun #1 no shift cold to hot.

View attachment 599436

Deviation between cold and hot was .21” elevation, and .16” windage. Well inside statistical variation.

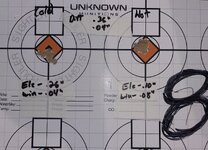

Gun #2 no shift cold to hot:

View attachment 599437

Deviation between cold and hot centers was .28” elevation, and .07” windage. Well inside statistical variation.

Gun #3 no shift cold to hot:

View attachment 599438

Deviation between cold and hot was 0.0” elevation, and .13” windage. Well inside statistical variation.

Gun #4 shifted .52” in elevation and .13” in windage, with an asterisk:

View attachment 599439

Somewhere around shot 5 or 7 of the hot barrel group, a loud “ting” was heard and the gun recoiled noticeably more than usual. Firing stopped, and the rifle was unloaded and checked for a baffle strike. The suppressor was fine and nothing else could be found. Firing resumed, with a noticeable downward shift in the group after the event and the same noticeable difference in recoil.

The next day, after further shooting and inspection, we found that the action screws had loosened substantially. Once they were retorqued, the rifle performed normally and no shift could be detected.

Gun #5 no shift cold to hot.

View attachment 599440

The cold group and the initial hot group were different enough that a third group was fired to confirm. The rifle just doesn’t particularly like the ammo. The third 10-round group landed smack in the middle of the first two, filling in the cone.

Gun #6 no shift cold to hot:

View attachment 599441

Deviation from cold to hot was .03” elevation and .29” windage. Well inside statistical variation.

Gun #7 no shift cold to hot:

View attachment 599442

Deviation between cold and hot was .12” elevation and .11” windage. Well inside statistical variation. The rifle does not shoot this ammo well.

Gun #8 no shift cold to hot:

View attachment 599443

Deviation from cold to hot was .36” elevation and .04” windage. Well inside statistical variation for this rifle. Of note, this was with a fixed 6x scope and these are the two best 10 round groups this rifle has ever produced. It normally is around 1.1 to 1.2 MOA for ten rounds. It also has more than 20k rounds in this barrel without ever being cleaned.

Gun #9 no shift cold to hot:

View attachment 599445

#9 started the hot group with the turret accidentally dialed .1 mil up in elevation (top right). A second hot group was fired (bottom left), and the deviation from cold to hot was .32” in elevation and .03” in windage.

Gun #10 no shift from cold to hot:

View attachment 599446

Deviation from cold to hot was .06” in elevation and .07” in windage. Well inside statistical variation.

Cont….