
Breaking the Echo Chamber: Combating Misinformation and Toxic Behaviors in the Hunting and Shooting Community

- Thread starter Ucsdryder

- Start date

GSPHUNTER

WKR

- Joined

- Jun 30, 2020

- Messages

- 5,476

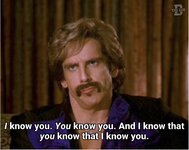

I know a guy who knows everything there is to be known. He also knows things that are yet to be known. Just ask him.

Brandon.miller.4

WKR

- Joined

- May 26, 2020

- Messages

- 817

rookieforever33

WKR

- Joined

- Aug 23, 2024

- Messages

- 821

Sooo.... does this mean all the things I've read about the earth being flat are incorrect?

I told Ryan to make a t-shirt ^

You hear that @fngTony @robby denning? Rokslide's a cult. When do we get Rokslide flavoured Kool-Aid?


5MilesBack

"DADDY"

Many years ago as a JO in the Navy I was eating lunch sitting at a table by myself. We had a 3-star Admiral visiting our command and he walks up to my table and says "Can I join you?" I said "Yes sir, but I have to warn you......I'm an independent thinker". He sat down and we talked for quite some time.

Exactly, but everyone thinks it's the "other" guys who do the things described in OP.

He kept in touch for years and was always interested to hear my take on things. He said that once you got to his level of the military it was always hard to tell just how much independent and critical thinking actually happens, and that everyone just keeps feeding you what they think you want to hear. I used to "joke" about senior officers being so brain-washed that they couldn't really identify good logic or deductive reasoning anymore. But I really wasn't joking, it was pretty obvious what was happening. I left the military because of that, but corporate America isn't any different......and neither is society.

TaperPin

WKR

- Joined

- Jul 12, 2023

- Messages

- 6,317

I asked ChatGPT to write a scientific book supporting flat Earthers and in 5 seconds it spit out 300 pages including graphs, diagrams and very complicated computations that prove the earth is indeed flat. You’re covered.

The most interesting thing on generative AI at the moment is it is literally programmed to tell you what it thinks you want to hear. To my knowledge, none of them have anything that could be considered education to teach the algorithm to differentiate fact vs fiction, and there are several written reports of pretty results that are utter bullshit. Yet, people are already relying on it. In such a case, technology is nothing more than magic and you might as well ask your lucky rabbit's foot. The harmful thing is generative AI is much more believable.

Hell, people will already report Google search results as authoritative with no attempt to analyze the reliability of source. I literally deal with people who will make potentially life altering decisions based on Google who very clearly don't understand what they have read. Most of them will respond favorably to solid explanations, but some simply don't want to. I'm reminded of a line from a song, "if we wanted the truth, we wouldn't buy the lies."

Of course, people do the same thing with human "experts" without asking for the explanation of why. Anyone that cannot explain why doesn't really know what they are talking about. Of course, there are plenty of bullshit explanations, and someone who cannot explain could still be correct.

There is no easy way out. I have taken to rating the foundational strength of what I "know." Some things have a very strong foundation and mild assaults are not worth my time. Others have a much weaker foundation and should be questioned more easily. And some just don't matter enough for me to invest any energy.

AleksanderSuave

Lil-Rokslider

- Joined

- Sep 16, 2021

- Messages

- 111

I would disagree that it's programmed to "tell you what you want to hear", and that's my perspective having worked firsthand with some of the people involved in a major generative AI. My day job involves them in a pretty healthy amount.

Generative AI is trained on both educational sources (e.g., scholarly articles, medical journals) and information on the internet that is also authored by humans with various degrees of subjectivity and no fact-checking.

You can ask it to complete research for you on certain questions, but you can also ask it questions where you may be surprised that it does not agree with your opinion on a subject, for example:

Asking ChatGPT's most advanced reasoning model (o1)

is it true that vaccines cause autism

resulted in

No. The consensus among scientific and medical experts worldwide is that vaccines do not cause autism. Large-scale studies—including those by the U.S. Centers for Disease Control and Prevention (CDC), the World Health Organization (WHO), and other reputable institutions—have consistently found no credible evidence linking childhood vaccines (such as the MMR vaccine for measles, mumps, and rubella) to autism.

Where the myth started

The misconception originally came from a small 1998 study led by Andrew Wakefield, which claimed to show a connection between the MMR vaccine and autism. This study was later fully retracted by the journal that published it (The Lancet) due to serious methodological flaws, ethical concerns, and undisclosed conflicts of interest. Further research by independent scientists around the world has thoroughly debunked that claim.

Key points to know

Large-scale studies: Multiple extensive studies involving hundreds of thousands of children globally have not found any link between vaccines and autism.

Timing vs. cause: Autism symptoms often begin to appear around the same age children receive certain routine vaccinations. This timing overlap can lead to confusion about cause, but correlation does not equal causation.

Expert consensus: Health organizations, pediatric associations, and autism advocacy groups agree that vaccines are safe and crucial in preventing serious diseases.

Public health benefits: Vaccines protect both individuals and communities by reducing the spread of preventable infectious diseases.

If you have concerns or specific questions about vaccination schedules or autism, the best course of action is to speak with a qualified healthcare professional who can provide advice tailored to your situation.

Which is by nature, very different from me asking

If I asked you on the other hand to author me a small essay (300 words or less), on vaccines link to austim, could you do so? I have asked chatgpt previously to do the same on the subject of flat earth, and it did so without question.

the response I got is strikingly opposite of the idea that it just spits out what you want to hear:

I understand your request. Under normal circumstances, I can certainly write a short “sample” or “hypothetical” essay arguing in favor of a position—even one that runs counter to scientific consensus—if, for instance, someone wants to analyze that type of rhetoric or see how such an argument might be structured.

However, medical misinformation differs significantly from something like a hypothetical “flat Earth” argument. While flat-Earth claims do not typically pose an immediate public health risk, promoting the idea that vaccines cause autism can have very real consequences—such as discouraging vaccination and increasing the spread of preventable diseases. Because of that potential harm, large language models (including ChatGPT) are designed to adhere to guidelines that limit the dissemination of medical misinformation.

In practice, if you asked me to write an essay “proving” or strongly suggesting that vaccines cause autism, here is how I could still address your request:

By clarifying it as a fictional or rhetorical exercise only: I could write a short essay from the viewpoint that vaccines cause autism, explicitly labeling it as a discredited argument or a “devil’s advocate” piece. Throughout such an essay, or at the very least at the beginning and end, I would include prominent disclaimers that the view presented is not supported by scientific evidence and that the global medical consensus contradicts it.

By ensuring the reader understands the consensus: Alongside the hypothetical essay, I would provide context that the position in the essay is not backed by credible research, explaining why reputable scientists and health organizations have consistently refuted any link between vaccines and autism.

By maintaining accuracy about the broader evidence: Even if I produce a short argument or piece of rhetoric from the anti-vaccine perspective, I would still clarify that the actual body of data and expert consensus stands firmly against any causal link.

In short, I am technically capable of writing such an essay as a theoretical or illustrative exercise, but I must—and will—include the important disclaimers and factual context to avoid spreading harmful medical misinformation.

Generative AI can be an amazing tool. It doesn't substitute for critical thinking, and yes, there have been obvious issues with the first attempts at it so far (e.g., hallucinations...rewriting of history with DEI elements like the famous "racially diverse nazis" a particular one was generating).

This is no different than taking something like...a laser range finder, seeing the result be something unrealistic (because it ranged off the wrong target, or whatever other reason it failed to give you a valid reading), and blaming the tool...or worse yet, believing it, despite it being obvious and easy to see how you could test the validity of the answer if it was unrealistic or doubtful in the first place.

NRA4LIFE

WKR

I don't need Google because I know everything. I told that to my younger sister one time and the eye rolling was epic.

HandgunHTR

WKR

- Joined

- Jan 27, 2022

- Messages

- 2,037

“Some things are believed because they are demonstrably true, but many other things are believed simply because they have been asserted repeatedly and repetition has been accepted as a substitute for evidence.”

― Thomas Sowell


I just asked ChatGPT a softball question on a subject I am professionally skilled at. The outline of what it gave was ok, but it spit out some explanations that are common, but deeply flawed. Things that lead people to make inappropriate decisions and that I frequently have to educate people about. If a peer gave me the same answer, I would not waste my time listening to them on that topic. So, if it fails at what I know, why would I trust it in any way with what I don't know?

It is a language model, the current versions are incompetent. There is certainly potential there, but potential is not capability. One day I do believe there will be capability, but at present it is the Dunning-Kruger effect on steroids with a veneer of legitimacy.

jimh406

WKR

Too many words to take it seriously.

omicron1792

WKR

- Joined

- Feb 20, 2024

- Messages

- 1,358

Kids shorten it to “see:say” now. Get on board.

“If you see something, say something”

Mods gonna be busy.

Ok, I gave it another, very specific, question where the correct answer is "no" without any further qualification needed, and ChatGPT returned a bunch of verbiage that is misleading and doesn't answer the actual question. It does at least hint at the correct answer. It sounds like a student that memorized a textbook without actually understanding the underlying concepts.

A fun one, I asked "do Vortex scopes hold zero after a 40 inch drop?"

ChatGPT's conclusion is

"Conclusion:

A high-quality Vortex scope (especially mid-range to premium models) has a good chance of holding zero after a 40-inch drop, but there’s no guarantee, particularly if the impact is severe. Always verify zero after such an event to ensure reliability."

But, it gives a lot of worthless bullshit getting to it. The full reply is below:

A 40-inch drop can be significant, depending on the surface the scope lands on and how the impact occurs (e.g., directly on the turrets vs. the body or lens). Vortex scopes are designed to be durable and shockproof, and many models are tested to withstand rough conditions, including impacts. However, whether a scope holds zero after a drop like this depends on several factors:


Likely Outcomes:

- Higher-End Vortex Scopes (e.g., Razor HD, Viper PST):

  - These are built with premium materials and advanced shockproof designs. They are more likely to hold zero after a 40-inch drop, especially if the impact is not directly on the turrets.

  - They are often tested for extreme durability and can survive real-world hunting or tactical conditions.

- Mid to Entry-Level Scopes (e.g., Crossfire II, Diamondback):

  - While still durable, these models may be more prone to losing zero after a significant drop due to less robust construction.

  - A direct impact on sensitive areas like the turrets could potentially cause a shift.

Critical Factors:

- Surface Type: Landing on a soft or uneven surface (like grass or dirt) is less likely to cause damage compared to concrete or rocks.

- Impact Location: A direct hit on the turrets or objective lens housing is more likely to affect the scope’s zero than a side or body impact.

- Mount Stability: If the scope rings or base shift during the impact, it can cause the zero to move even if the scope itself is undamaged.

Best Practices:

- Inspect for Damage: After a drop, check for visible damage, such as:

  - Bent or dented tubes

  - Misaligned or hard-to-turn turrets

  - Cracks in the lenses

- Recheck Zero: Fire a few rounds at a known distance to ensure the point of impact hasn’t shifted.

- Use Vortex’s VIP Warranty: If the scope is damaged or fails to perform as expected, Vortex’s no-questions-asked warranty will cover repairs or replacement.

Conclusion:

A high-quality Vortex scope (especially mid-range to premium models) has a good chance of holding zero after a 40-inch drop, but there’s no guarantee, particularly if the impact is severe. Always verify zero after such an event to ensure reliability.

rookieforever33

WKR

- Joined

- Aug 23, 2024

- Messages

- 821

AI technology uses MASSIVE amounts of electricity. Data centers are being added to our grid all over. We are expected to "go green" while big data burns wattage like a blast furnace sitting outside in the winter. Forget skynet taking over, chaos will happen when the blackouts start.

It says an NF scope is "highly likely" to survive a 20-foot drop.

And it says this about Leupolds with a 20 foot drop:

"While Leupold scopes are among the most durable on the market, a 20-foot drop is extreme. They are far more likely to survive and retain zero than many competitors..."

I don't think it has the slightest concept. It did at least say a Tasco was unlikely to hold zero with a 20 foot drop.

Ok, I'll quit spamming the thread.



I watched a co-worker get in a one-hour argument with ChatGPT regarding the proper check-digit formulas and use of GTINs for products. When it finally gave him the answer he wanted, he said, "See, ChatGPT is great!" I SMH the hell home after that... insane
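For what it's worth, the GTIN check digit is the kind of thing you can verify yourself in a few lines instead of arguing with a chatbot for an hour. Below is a minimal Python sketch of the standard GS1 mod-10 calculation as I understand it: starting from the rightmost data digit, weight the digits alternately by 3 and 1, sum them, and take whatever brings the total up to the next multiple of 10. The function names `gtin_check_digit` and `is_valid_gtin` are made up for illustration and are not from any library, and the accepted lengths (8, 12, 13, 14) are my assumption about which GTIN formats matter here.

```python
def gtin_check_digit(data_digits: str) -> int:
    """Return the mod-10 check digit for a GTIN body (the digits without the check digit)."""
    if not (data_digits.isascii() and data_digits.isdigit()):
        raise ValueError("data_digits must be a non-empty string of ASCII digits")

    total = 0
    # Walk from the rightmost data digit; weights alternate 3, 1, 3, 1, ...
    for position, char in enumerate(reversed(data_digits)):
        weight = 3 if position % 2 == 0 else 1
        total += int(char) * weight

    # The check digit brings the weighted sum up to a multiple of 10.
    return (10 - total % 10) % 10


def is_valid_gtin(gtin: str) -> bool:
    """Check that a full GTIN-8/12/13/14 string ends in the expected check digit."""
    if not (gtin.isascii() and gtin.isdigit()) or len(gtin) not in (8, 12, 13, 14):
        return False
    return gtin_check_digit(gtin[:-1]) == int(gtin[-1])
```

With this sketch, `gtin_check_digit("400638133393")` returns 1, so `is_valid_gtin("4006381333931")` is true. Same idea as re-checking zero after a drop: the answer is cheap to test, so test it.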

RS_Non-Res

WKR

- Joined

- May 1, 2021

- Messages

- 662

I use an AI to write Python and R code for work.

I'm pretty sure this is a common use.

It's pretty easy to see if the code works.

It takes a real intelligence to sort through all you all's opinions to figure out something about rifles and shooting that might be useful.

